TensorFlow practice

from sklearn.datasets import load_iris

data = load_iris()

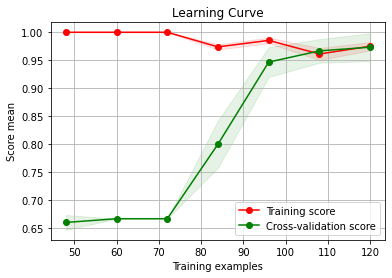

from sklearn.model_selection import learning_curve

from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier()

from sklearn.linear_model import LogisticRegression

import numpy as np

np.linspace(0.1,1.1,10, endpoint=False)

array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1. ])
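The call above uses `endpoint=False` with a stop value of 1.1 so that the grid ends at 1.0, which is the largest fraction `learning_curve` accepts for float `train_sizes`. As a quick check, assuming only NumPy, the same grid can also be written with the default `endpoint=True`:

```python
import numpy as np

# Grid used in the notebook: 10 points starting at 0.1, stop value 1.1 excluded.
sizes_open = np.linspace(0.1, 1.1, 10, endpoint=False)

# The same grid with the stop value included (NumPy's default behaviour).
sizes_closed = np.linspace(0.1, 1.0, 10)

# Both describe the fractions 0.1, 0.2, ..., 1.0 up to floating-point rounding.
same_grid = np.allclose(sizes_open, sizes_closed)
print(same_grid)
```

The second form is arguably easier to read, since the largest fraction appears directly in the call.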

train_size, train_score, test_score = learning_curve(LogisticRegression(), data.data, data.target,
                                                     train_sizes=np.linspace(0.1,1.1,10, endpoint=False))

C:\Users\sunde\AppData\Roaming\Python\Python39\site-packages\sklearn\linear_model\_logistic.py:814: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

Please also refer to the documentation for alternative solver options:

https://scikit-learn.org/stable/modules/linear_model.html#logistic-regression

n_iter_i = _check_optimize_result(
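The warning itself suggests two remedies: raise `max_iter` or scale the features. A minimal sketch of both, assuming a `StandardScaler` + `LogisticRegression` pipeline. The pipeline, `max_iter=1000`, the `train_sizes` grid, and the `shuffle`/`random_state` settings are illustrative choices, not part of the original notebook:

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import learning_curve
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

data = load_iris()

# Scale the features first, then fit; max_iter is raised as the warning suggests.
clf = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# shuffle=True because the iris rows are ordered by class, so an unshuffled
# small training subset could end up containing a single class only.
train_size, train_score, test_score = learning_curve(
    clf, data.data, data.target,
    train_sizes=np.linspace(0.3, 1.0, 5),
    cv=5, shuffle=True, random_state=0,
)

print(train_size)
print(test_score.mean(axis=1))
```

Wrapping the scaler in the pipeline means it is refit on each training subset, so no information from the held-out fold leaks into the scaling step.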

# !pip install -U sklearn-evaluation

import sklearn_evaluation

sklearn_evaluation.plot.learning_curve(train_score, test_score, train_size)

<AxesSubplot:title={'center':'Learning Curve'}, xlabel='Training examples', ylabel='Score mean'>

Key point: make traditional ML tooling (here scikit-learn) interoperate with other frameworks!

import tensorflow as tf

(X_train,y_train),(X_test,y_test) = tf.keras.datasets.mnist.load_data()

X_train = X_train[...,tf.newaxis] / 255

X_test = X_test[...,tf.newaxis] / 255 # this step was missing earlier

y_train = tf.keras.utils.to_categorical(y_train)

import tensorflow_addons as tfa

tfa.metrics.F1Score

from sklearn.metrics import recall_score

input_ = tf.keras.Input((28,28,1))

x = tf.keras.layers.Conv2D(32,3)(input_)

x = tf.keras.layers.ReLU()(x)

x = tf.keras.layers.MaxPool2D()(x)

x = tf.keras.layers.Conv2D(32,3)(x)

x = tf.keras.layers.ReLU()(x)

x = tf.keras.layers.Flatten()(x)

x = tf.keras.layers.Dense(128)(x)

x = tf.keras.layers.ReLU()(x)

x = tf.keras.layers.Dense(10, activation='softmax')(x)

model = tf.keras.Model(input_, x)

model.compile(loss=tf.keras.losses.CategoricalCrossentropy(from_logits=False),
              metrics=[tfa.metrics.F1Score(10)]) # F1Score can be computed directly

# model.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True), metrics=[tf.keras.metrics.Precision()])

'''

model.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False), # from_logits=False because F1Score cannot handle negative (logit) values
              metrics=[tf.keras.metrics.Precision(), tf.keras.metrics.Recall()]) # F1Score can be derived from Precision and Recall

'''
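The commented-out variant relies on the fact that F1 is the harmonic mean of precision and recall. A minimal sketch in plain Python (the helper name `f1_from_precision_recall` is hypothetical and not part of Keras):

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Equal precision and recall give the same value back.
f1_balanced = f1_from_precision_recall(0.5, 0.5)

# When the two differ, F1 sits closer to the smaller of the two.
f1_skewed = f1_from_precision_recall(1.0, 0.5)

print(f1_balanced, f1_skewed)
```

Because the harmonic mean is pulled toward the smaller value, a model cannot reach a high F1 by maximizing only one of the two metrics.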

model.summary()

Model: "model_52"
_________________________________________________________________
 Layer (type)                    Output Shape              Param #
=================================================================
 input_52 (InputLayer)           [(None, 28, 28, 1)]       0
 conv2d_102 (Conv2D)             (None, 26, 26, 32)        320
 re_lu_153 (ReLU)                (None, 26, 26, 32)        0
 max_pooling2d_50 (MaxPooling2D) (None, 13, 13, 32)        0
 conv2d_103 (Conv2D)             (None, 11, 11, 32)        9248
 re_lu_154 (ReLU)                (None, 11, 11, 32)        0
 flatten_51 (Flatten)            (None, 3872)              0
 dense_102 (Dense)               (None, 128)               495744
 re_lu_155 (ReLU)                (None, 128)               0
 dense_103 (Dense)               (None, 10)                1290
=================================================================
Total params: 506,602
Trainable params: 506,602
Non-trainable params: 0
_________________________________________________________________
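The shapes and parameter counts in the summary can be reproduced by hand. A small sketch in plain Python, assuming 3x3 kernels with 'valid' padding and stride 1, and 2x2 max pooling (the Keras defaults used by the model above); the helper names are hypothetical:

```python
def conv2d_params(kernel: int, in_channels: int, filters: int) -> int:
    # One weight per kernel cell and input channel, plus one bias, per filter.
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(in_features: int, units: int) -> int:
    # One weight per input feature, plus one bias, per unit.
    return (in_features + 1) * units


def valid_conv_size(size: int, kernel: int) -> int:
    # Output side length for 'valid' padding with stride 1.
    return size - kernel + 1


size = valid_conv_size(28, 3)    # first Conv2D
size = size // 2                 # 2x2 max pooling
size = valid_conv_size(size, 3)  # second Conv2D
flat = size * size * 32          # Flatten over 32 channels

params = [
    conv2d_params(3, 1, 32),
    conv2d_params(3, 32, 32),
    dense_params(flat, 128),
    dense_params(128, 10),
]
total = sum(params)
print(flat, params, total)
```

Nearly all of the parameters sit in the first Dense layer, which is why the size of the flattened feature map matters so much for model size.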

history = model.fit(X_train,y_train,epochs=10)

Epoch 1/10

1875/1875 [==============================] - 7s 4ms/step - loss: 0.3393 - f1_score: 0.9537

Epoch 2/10

1875/1875 [==============================] - 7s 3ms/step - loss: 0.0788 - f1_score: 0.9804

Epoch 3/10

1875/1875 [==============================] - 6s 3ms/step - loss: 0.0681 - f1_score: 0.9846

Epoch 4/10

1875/1875 [==============================] - 7s 3ms/step - loss: 0.0592 - f1_score: 0.9862

Epoch 5/10

1875/1875 [==============================] - 6s 3ms/step - loss: 0.0552 - f1_score: 0.9877

Epoch 6/10

1875/1875 [==============================] - 6s 3ms/step - loss: 0.0514 - f1_score: 0.9892

Epoch 7/10

1875/1875 [==============================] - 6s 3ms/step - loss: 0.0501 - f1_score: 0.9901

Epoch 8/10

1875/1875 [==============================] - 6s 3ms/step - loss: 0.0493 - f1_score: 0.9904

Epoch 9/10

548/1875 [=======>......................] - ETA: 4s - loss: 0.0424 - f1_score: 0.9918

---------------------------------------------------------------------------

KeyboardInterrupt Traceback (most recent call last)

Input In [122], in <cell line: 1>()

----> 1 history = model.fit(X_train,y_train,epochs=10)

File C:\ProgramData\Anaconda3\lib\site-packages\keras\utils\traceback_utils.py:64, in filter_traceback.<locals>.error_handler(*args, **kwargs)

62 filtered_tb = None

63 try:

---> 64 return fn(*args, **kwargs)

65 except Exception as e: # pylint: disable=broad-except

66 filtered_tb = _process_traceback_frames(e.__traceback__)

File C:\ProgramData\Anaconda3\lib\site-packages\keras\engine\training.py:1384, in Model.fit(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, validation_batch_size, validation_freq, max_queue_size, workers, use_multiprocessing)

1377 with tf.profiler.experimental.Trace(

1378 'train',

1379 epoch_num=epoch,

1380 step_num=step,

1381 batch_size=batch_size,

1382 _r=1):

1383 callbacks.on_train_batch_begin(step)

-> 1384 tmp_logs = self.train_function(iterator)

1385 if data_handler.should_sync:

1386 context.async_wait()

File C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\util\traceback_utils.py:150, in filter_traceback.<locals>.error_handler(*args, **kwargs)

148 filtered_tb = None

149 try:

--> 150 return fn(*args, **kwargs)

151 except Exception as e:

152 filtered_tb = _process_traceback_frames(e.__traceback__)

File C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\eager\def_function.py:915, in Function.__call__(self, *args, **kwds)

912 compiler = "xla" if self._jit_compile else "nonXla"

914 with OptionalXlaContext(self._jit_compile):

--> 915 result = self._call(*args, **kwds)

917 new_tracing_count = self.experimental_get_tracing_count()

918 without_tracing = (tracing_count == new_tracing_count)

File C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\eager\def_function.py:947, in Function._call(self, *args, **kwds)

944 self._lock.release()

945 # In this case we have created variables on the first call, so we run the

946 # defunned version which is guaranteed to never create variables.

--> 947 return self._stateless_fn(*args, **kwds) # pylint: disable=not-callable

948 elif self._stateful_fn is not None:

949 # Release the lock early so that multiple threads can perform the call

950 # in parallel.

951 self._lock.release()

File C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\eager\function.py:2956, in Function.__call__(self, *args, **kwargs)

2953 with self._lock:

2954 (graph_function,

2955 filtered_flat_args) = self._maybe_define_function(args, kwargs)

-> 2956 return graph_function._call_flat(

2957 filtered_flat_args, captured_inputs=graph_function.captured_inputs)

File C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\eager\function.py:1853, in ConcreteFunction._call_flat(self, args, captured_inputs, cancellation_manager)

1849 possible_gradient_type = gradients_util.PossibleTapeGradientTypes(args)

1850 if (possible_gradient_type == gradients_util.POSSIBLE_GRADIENT_TYPES_NONE

1851 and executing_eagerly):

1852 # No tape is watching; skip to running the function.

-> 1853 return self._build_call_outputs(self._inference_function.call(

1854 ctx, args, cancellation_manager=cancellation_manager))

1855 forward_backward = self._select_forward_and_backward_functions(

1856 args,

1857 possible_gradient_type,

1858 executing_eagerly)

1859 forward_function, args_with_tangents = forward_backward.forward()

File C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\eager\function.py:499, in _EagerDefinedFunction.call(self, ctx, args, cancellation_manager)

497 with _InterpolateFunctionError(self):

498 if cancellation_manager is None:

--> 499 outputs = execute.execute(

500 str(self.signature.name),

501 num_outputs=self._num_outputs,

502 inputs=args,

503 attrs=attrs,

504 ctx=ctx)

505 else:

506 outputs = execute.execute_with_cancellation(

507 str(self.signature.name),

508 num_outputs=self._num_outputs,

(...)

511 ctx=ctx,

512 cancellation_manager=cancellation_manager)

File C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\eager\execute.py:54, in quick_execute(op_name, num_outputs, inputs, attrs, ctx, name)

52 try:

53 ctx.ensure_initialized()

---> 54 tensors = pywrap_tfe.TFE_Py_Execute(ctx._handle, device_name, op_name,

55 inputs, attrs, num_outputs)

56 except core._NotOkStatusException as e:

57 if name is not None:

KeyboardInterrupt:

import pandas as pd

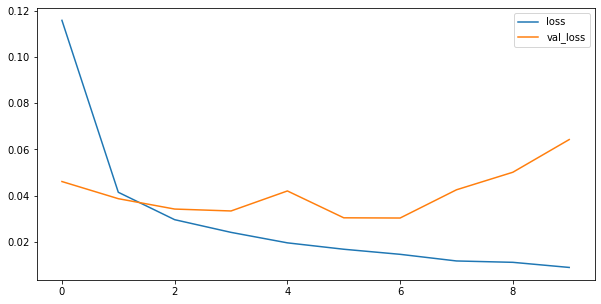

pd.DataFrame(history.history).plot.line(figsize=(10,5))

<AxesSubplot:>

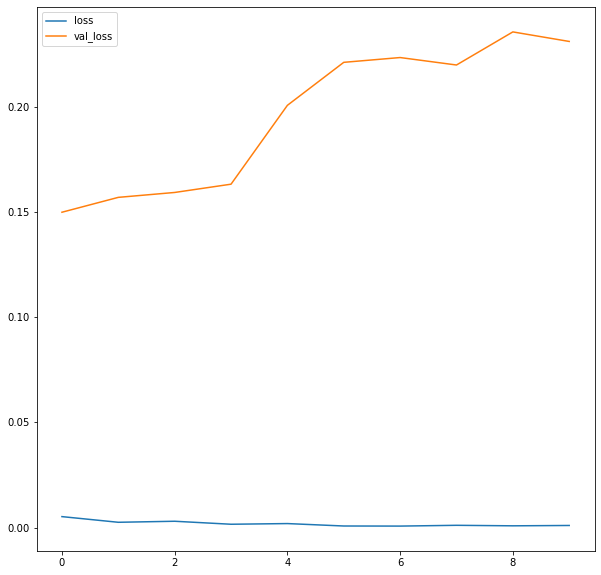

pd.DataFrame(history.history).plot.line(figsize=(10,10)) # without pooling

<AxesSubplot:>

Functional paradigm >

- Repeated experiments

- Integration with other tools through a model-building function

def build_fn():
    input_ = tf.keras.Input((28,28,1))
    x = tf.keras.layers.Conv2D(32,3)(input_)
    x = tf.keras.layers.ReLU()(x)
    x = tf.keras.layers.MaxPool2D()(x)
    x = tf.keras.layers.Conv2D(32,3)(x)
    x = tf.keras.layers.ReLU()(x)
    x = tf.keras.layers.Flatten()(x)
    x = tf.keras.layers.Dense(128)(x)
    x = tf.keras.layers.ReLU()(x)
    x = tf.keras.layers.Dense(10)(x)
    model = tf.keras.Model(input_, x)
    model.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
                  metrics=['acc'])
    return model

from tensorflow.keras.wrappers.scikit_learn import KerasClassifier

model = KerasClassifier(build_fn, epochs=5) # a TensorFlow model that can interoperate with scikit-learn

C:\Users\sunde\AppData\Local\Temp\ipykernel_16740\964211621.py:1: DeprecationWarning: KerasClassifier is deprecated, use Sci-Keras (https://github.com/adriangb/scikeras) instead. See https://www.adriangb.com/scikeras/stable/migration.html for help migrating.

model = KerasClassifier(build_fn, epochs=5) # a TensorFlow model that can interoperate with scikit-learn

train_size, train_score, test_score = learning_curve(model, X_train, y_train, cv=2)

Epoch 1/5

94/94 [==============================] - 1s 5ms/step - loss: 0.6436 - acc: 0.7917

Epoch 2/5

94/94 [==============================] - 0s 3ms/step - loss: 0.1913 - acc: 0.9387

Epoch 3/5

94/94 [==============================] - 0s 3ms/step - loss: 0.1150 - acc: 0.9640

Epoch 4/5

94/94 [==============================] - 0s 3ms/step - loss: 0.0614 - acc: 0.9800

Epoch 5/5

94/94 [==============================] - 0s 3ms/step - loss: 0.0351 - acc: 0.9890

938/938 [==============================] - 2s 2ms/step - loss: 0.1415 - acc: 0.9600

94/94 [==============================] - 0s 2ms/step - loss: 0.0135 - acc: 0.9960

Epoch 1/5

305/305 [==============================] - 1s 3ms/step - loss: 0.3258 - acc: 0.8969

Epoch 2/5

305/305 [==============================] - 1s 3ms/step - loss: 0.0934 - acc: 0.9715

Epoch 3/5

305/305 [==============================] - 1s 3ms/step - loss: 0.0579 - acc: 0.9807

Epoch 4/5

305/305 [==============================] - 1s 3ms/step - loss: 0.0371 - acc: 0.9881

Epoch 5/5

305/305 [==============================] - 1s 3ms/step - loss: 0.0221 - acc: 0.9925

938/938 [==============================] - 2s 2ms/step - loss: 0.0746 - acc: 0.9792

305/305 [==============================] - 1s 2ms/step - loss: 0.0088 - acc: 0.9976

Epoch 1/5

516/516 [==============================] - 2s 3ms/step - loss: 0.2496 - acc: 0.9224

Epoch 2/5

516/516 [==============================] - 2s 3ms/step - loss: 0.0737 - acc: 0.9767

Epoch 3/5

516/516 [==============================] - 2s 3ms/step - loss: 0.0455 - acc: 0.9860

Epoch 4/5

516/516 [==============================] - 2s 3ms/step - loss: 0.0305 - acc: 0.9907

Epoch 5/5

516/516 [==============================] - 2s 3ms/step - loss: 0.0204 - acc: 0.9936

938/938 [==============================] - 2s 2ms/step - loss: 0.0622 - acc: 0.9844

516/516 [==============================] - 1s 2ms/step - loss: 0.0079 - acc: 0.9976

Epoch 1/5

727/727 [==============================] - 3s 3ms/step - loss: 0.1988 - acc: 0.9391

Epoch 2/5

727/727 [==============================] - 2s 3ms/step - loss: 0.0660 - acc: 0.9794

Epoch 3/5

727/727 [==============================] - 2s 3ms/step - loss: 0.0414 - acc: 0.9862

Epoch 4/5

727/727 [==============================] - 2s 3ms/step - loss: 0.0293 - acc: 0.9919

Epoch 5/5

727/727 [==============================] - 2s 3ms/step - loss: 0.0210 - acc: 0.9935

938/938 [==============================] - 2s 2ms/step - loss: 0.0583 - acc: 0.9844

727/727 [==============================] - 1s 2ms/step - loss: 0.0115 - acc: 0.9958

Epoch 1/5

938/938 [==============================] - 3s 3ms/step - loss: 0.1644 - acc: 0.9489

Epoch 2/5

938/938 [==============================] - 3s 3ms/step - loss: 0.0521 - acc: 0.9832

Epoch 3/5

938/938 [==============================] - 3s 3ms/step - loss: 0.0351 - acc: 0.9891

Epoch 4/5

938/938 [==============================] - 3s 3ms/step - loss: 0.0255 - acc: 0.9926

Epoch 5/5

938/938 [==============================] - 3s 3ms/step - loss: 0.0190 - acc: 0.9944

938/938 [==============================] - 2s 2ms/step - loss: 0.0629 - acc: 0.9834

938/938 [==============================] - 2s 2ms/step - loss: 0.0174 - acc: 0.9943

Epoch 1/5

94/94 [==============================] - 1s 4ms/step - loss: 0.5808 - acc: 0.8187

Epoch 2/5

94/94 [==============================] - 0s 4ms/step - loss: 0.1993 - acc: 0.9417

Epoch 3/5

94/94 [==============================] - 0s 4ms/step - loss: 0.1137 - acc: 0.9657

Epoch 4/5

94/94 [==============================] - 0s 3ms/step - loss: 0.0763 - acc: 0.9767

Epoch 5/5

94/94 [==============================] - 0s 4ms/step - loss: 0.0448 - acc: 0.9863

938/938 [==============================] - 2s 2ms/step - loss: 0.1672 - acc: 0.9527

94/94 [==============================] - 0s 2ms/step - loss: 0.0271 - acc: 0.9920

Epoch 1/5

305/305 [==============================] - 1s 3ms/step - loss: 0.3221 - acc: 0.8996

Epoch 2/5

305/305 [==============================] - 1s 3ms/step - loss: 0.1005 - acc: 0.9695

Epoch 3/5

305/305 [==============================] - 1s 3ms/step - loss: 0.0605 - acc: 0.9819

Epoch 4/5

305/305 [==============================] - 1s 3ms/step - loss: 0.0376 - acc: 0.9886

Epoch 5/5

305/305 [==============================] - 1s 3ms/step - loss: 0.0277 - acc: 0.9916

938/938 [==============================] - 2s 2ms/step - loss: 0.0866 - acc: 0.9778

305/305 [==============================] - 1s 2ms/step - loss: 0.0104 - acc: 0.9962

Epoch 1/5

516/516 [==============================] - 2s 3ms/step - loss: 0.2285 - acc: 0.9305

Epoch 2/5

516/516 [==============================] - 2s 3ms/step - loss: 0.0712 - acc: 0.9782

Epoch 3/5

516/516 [==============================] - 2s 3ms/step - loss: 0.0421 - acc: 0.9876

Epoch 4/5

516/516 [==============================] - 2s 3ms/step - loss: 0.0270 - acc: 0.9915

Epoch 5/5

516/516 [==============================] - 2s 3ms/step - loss: 0.0199 - acc: 0.9945

938/938 [==============================] - 2s 2ms/step - loss: 0.0706 - acc: 0.9822

516/516 [==============================] - 1s 2ms/step - loss: 0.0093 - acc: 0.9972

Epoch 1/5

727/727 [==============================] - 3s 3ms/step - loss: 0.1980 - acc: 0.9400

Epoch 2/5

727/727 [==============================] - 2s 3ms/step - loss: 0.0616 - acc: 0.9808

Epoch 3/5

727/727 [==============================] - 2s 3ms/step - loss: 0.0386 - acc: 0.9879

Epoch 4/5

727/727 [==============================] - 2s 3ms/step - loss: 0.0257 - acc: 0.9923

Epoch 5/5

727/727 [==============================] - 2s 3ms/step - loss: 0.0174 - acc: 0.9949

938/938 [==============================] - 2s 2ms/step - loss: 0.0631 - acc: 0.9852

727/727 [==============================] - 1s 2ms/step - loss: 0.0088 - acc: 0.9977

Epoch 1/5

938/938 [==============================] - 3s 3ms/step - loss: 0.1621 - acc: 0.9503

Epoch 2/5

938/938 [==============================] - 3s 3ms/step - loss: 0.0535 - acc: 0.9842

Epoch 3/5

938/938 [==============================] - 3s 3ms/step - loss: 0.0337 - acc: 0.9898

Epoch 4/5

938/938 [==============================] - 3s 3ms/step - loss: 0.0237 - acc: 0.9925

Epoch 5/5

938/938 [==============================] - 3s 3ms/step - loss: 0.0181 - acc: 0.9944

938/938 [==============================] - 2s 2ms/step - loss: 0.0538 - acc: 0.9859

938/938 [==============================] - 2s 2ms/step - loss: 0.0088 - acc: 0.9976
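The step counts in the log (94, 305, 516, 727, 938) follow from the split arithmetic. A rough reconstruction, assuming the 60,000-sample MNIST training set, the Keras default batch size of 32, and the `learning_curve` default of `train_sizes=np.linspace(0.1, 1.0, 5)`:

```python
import math

import numpy as np

n_samples = 60_000
cv = 2
batch_size = 32

# With 2-fold CV, each fit sees at most half of the data.
n_train_per_fold = n_samples - n_samples // cv

# Fractions of the per-fold training set, converted to absolute sizes
# by truncating to integers.
fractions = np.linspace(0.1, 1.0, 5)
abs_sizes = (fractions * n_train_per_fold).astype(int)

# Steps per epoch = number of batches, with a final partial batch counted.
steps = [math.ceil(int(n) / batch_size) for n in abs_sizes]

# For comparison: steps per epoch when fitting on the full training set.
full_steps = math.ceil(n_samples / batch_size)

print(steps, full_steps)
```

The repeated `938/938` lines after each block of epochs match the scoring pass over the held-out fold of 30,000 samples, and the full-data figure matches the `1875/1875` seen in the earlier `model.fit` log.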

train_score

array([[0.99599999, 0.99199998],

[0.99764103, 0.99620515],

[0.99757576, 0.99715149],

[0.99582797, 0.99772042],

[0.99426669, 0.99756664]])

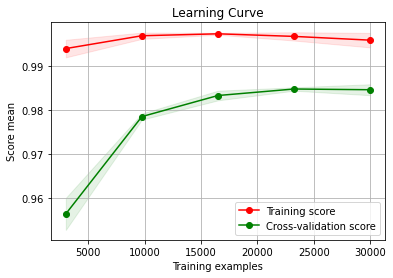

sklearn_evaluation.plot.learning_curve(train_score, test_score, train_size)

<AxesSubplot:title={'center':'Learning Curve'}, xlabel='Training examples', ylabel='Score mean'>

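Lasso > L1 penalty

Ridge > L2 penalty

ElasticNet > L1 and L2 penalties combined

A CNN is also built from linear operations with learnable weights, so L1 and L2 penalties can be applied to it as well.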
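The three penalties differ only in which norm of the weights is added to the loss. A minimal NumPy sketch of the unscaled forms (the helper names and coefficient values are hypothetical; scikit-learn's `Lasso`, `Ridge`, and `ElasticNet` parametrize and scale these terms somewhat differently):

```python
import numpy as np


def l1_penalty(w: np.ndarray, alpha: float) -> float:
    # Lasso-style term: sum of absolute weights.
    return float(alpha * np.sum(np.abs(w)))


def l2_penalty(w: np.ndarray, alpha: float) -> float:
    # Ridge-style term: sum of squared weights.
    return float(alpha * np.sum(np.square(w)))


def elastic_net_penalty(w: np.ndarray, l1: float, l2: float) -> float:
    # ElasticNet-style term: both penalties added together.
    return l1_penalty(w, l1) + l2_penalty(w, l2)


w = np.array([1.0, -2.0, 3.0])
print(l1_penalty(w, 0.1), l2_penalty(w, 0.01), elastic_net_penalty(w, 0.1, 0.01))
```

The same idea carries over to a CNN: the penalty is computed over a layer's kernel weights and added to the training loss.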

Ways to prevent overfitting

- More training data (the surest remedy)

- Use a regularizer

- Dropout: also helps reduce catastrophic forgetting and has an ensemble-like effect

- Use Batch Normalization

- Model checkpointing / early stopping
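To make the last item concrete, early stopping boils down to a patience counter on the validation loss. A framework-free sketch in plain Python (the function name, the loss values, and `patience=2` are made up for illustration):

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch at which training would stop.

    Training stops once the validation loss has failed to improve on the
    best value seen so far for `patience` consecutive epochs.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    # Never triggered: training runs through the last epoch.
    return len(val_losses) - 1


val_losses = [0.50, 0.40, 0.35, 0.36, 0.37, 0.30]
stop_epoch = early_stopping_epoch(val_losses, patience=2)
print(stop_epoch)
```

In this made-up sequence the loss would have improved again at the last epoch, which shows the trade-off behind the choice of `patience`: too small a value can stop training before a later improvement.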

Overfitting-related tools in TensorFlow

- tf.keras.regularizers.L1L2

- tf.keras.regularizers.l1_l2

- tf.keras.layers.Dropout

- tf.keras.callbacks.EarlyStopping

- tf.keras.callbacks.LearningRateScheduler

- tf.keras.callbacks.ReduceLROnPlateau
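A minimal sketch of how several of these pieces might be combined in one model, assuming a TensorFlow installation with `tf.keras`. The layer sizes, regularization strengths, dropout rate, callback settings, and the random demo data are illustrative assumptions, not values from the notebook:

```python
import numpy as np
import tensorflow as tf


def build_regularized_model():
    inputs = tf.keras.Input((28, 28, 1))
    # L1 + L2 penalty on the convolution kernel.
    x = tf.keras.layers.Conv2D(
        8, 3, activation="relu",
        kernel_regularizer=tf.keras.regularizers.l1_l2(l1=1e-5, l2=1e-4),
    )(inputs)
    x = tf.keras.layers.MaxPool2D()(x)
    x = tf.keras.layers.Flatten()(x)
    # Randomly zero half of the features during training only.
    x = tf.keras.layers.Dropout(0.5)(x)
    outputs = tf.keras.layers.Dense(10)(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
        metrics=["accuracy"],
    )
    return model


callbacks = [
    # Stop when val_loss has not improved for 3 epochs; keep the best weights.
    tf.keras.callbacks.EarlyStopping(
        monitor="val_loss", patience=3, restore_best_weights=True),
    # Halve the learning rate when val_loss plateaus for 2 epochs.
    tf.keras.callbacks.ReduceLROnPlateau(
        monitor="val_loss", factor=0.5, patience=2),
]

# Tiny random stand-in data so the sketch runs quickly; replace with MNIST.
rng = np.random.default_rng(0)
X_demo = rng.random((64, 28, 28, 1)).astype("float32")
y_demo = rng.integers(0, 10, size=64)

model = build_regularized_model()
history = model.fit(X_demo, y_demo, validation_split=0.25,
                    epochs=2, callbacks=callbacks, verbose=0)
print(sorted(history.history))
```

Both callbacks monitor `val_loss`, so they only work when validation data is supplied, here through `validation_split`.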